:~$ python3

Python 3.6.9 (default, Nov 7 2019, 10:44:02)

[GCC 8.3.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> from keras.datasets import imdb

/usr/lib/python3/dist-packages/h5py/__init__.py:36: FutureWarning: Conversion of the second argument of issubdtype from `float` to `np.floating` is deprecated. In future, it will be treated as `np.float64 == np.dtype(float).type`.

from ._conv import register_converters as _register_converters

Using TensorFlow backend.

/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/dtypes.py:516: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.

_np_qint8 = np.dtype([("qint8", np.int8, 1)])

/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/dtypes.py:517: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.

_np_quint8 = np.dtype([("quint8", np.uint8, 1)])

/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/dtypes.py:518: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.

_np_qint16 = np.dtype([("qint16", np.int16, 1)])

/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/dtypes.py:519: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.

_np_quint16 = np.dtype([("quint16", np.uint16, 1)])

/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/dtypes.py:520: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.

_np_qint32 = np.dtype([("qint32", np.int32, 1)])

/usr/local/lib/python3.6/dist-packages/tensorflow/python/framework/dtypes.py:525: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.

np_resource = np.dtype([("resource", np.ubyte, 1)])

/usr/local/lib/python3.6/dist-packages/tensorboard/compat/tensorflow_stub/dtypes.py:541: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.

_np_qint8 = np.dtype([("qint8", np.int8, 1)])

/usr/local/lib/python3.6/dist-packages/tensorboard/compat/tensorflow_stub/dtypes.py:542: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.

_np_quint8 = np.dtype([("quint8", np.uint8, 1)])

/usr/local/lib/python3.6/dist-packages/tensorboard/compat/tensorflow_stub/dtypes.py:543: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.

_np_qint16 = np.dtype([("qint16", np.int16, 1)])

/usr/local/lib/python3.6/dist-packages/tensorboard/compat/tensorflow_stub/dtypes.py:544: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.

_np_quint16 = np.dtype([("quint16", np.uint16, 1)])

/usr/local/lib/python3.6/dist-packages/tensorboard/compat/tensorflow_stub/dtypes.py:545: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.

_np_qint32 = np.dtype([("qint32", np.int32, 1)])

/usr/local/lib/python3.6/dist-packages/tensorboard/compat/tensorflow_stub/dtypes.py:550: FutureWarning: Passing (type, 1) or '1type' as a synonym of type is deprecated; in a future version of numpy, it will be understood as (type, (1,)) / '(1,)type'.

np_resource = np.dtype([("resource", np.ubyte, 1)])

>>> (train_data, train_labels), (test_data, test_labels) = imdb.load_data(num_words=10000)

Downloading data from https://s3.amazonaws.com/text-datasets/imdb.npz

17465344/17464789 [==============================] - 14s 1us/step

>>> train_data[0]

[1, 14, 22, 16, 43, 530, 973, 1622, 1385, 65, 458, 4468, 66, 3941, 4, 173, 36, 256, 5, 25, 100, 43, 838, 112, 50, 670, 2, 9, 35, 480, 284, 5, 150, 4, 172, 112, 167, 2, 336, 385, 39, 4, 172, 4536, 1111, 17, 546, 38, 13, 447, 4, 192, 50, 16, 6, 147, 2025, 19, 14, 22, 4, 1920, 4613, 469, 4, 22, 71, 87, 12, 16, 43, 530, 38, 76, 15, 13, 1247, 4, 22, 17, 515, 17, 12, 16, 626, 18, 2, 5, 62, 386, 12, 8, 316, 8, 106, 5, 4, 2223, 5244, 16, 480, 66, 3785, 33, 4, 130, 12, 16, 38, 619, 5, 25, 124, 51, 36, 135, 48, 25, 1415, 33, 6, 22, 12, 215, 28, 77, 52, 5, 14, 407, 16, 82, 2, 8, 4, 107, 117, 5952, 15, 256, 4, 2, 7, 3766, 5, 723, 36, 71, 43, 530, 476, 26, 400, 317, 46, 7, 4, 2, 1029, 13, 104, 88, 4, 381, 15, 297, 98, 32, 2071, 56, 26, 141, 6, 194, 7486, 18, 4, 226, 22, 21, 134, 476, 26, 480, 5, 144, 30, 5535, 18, 51, 36, 28, 224, 92, 25, 104, 4, 226, 65, 16, 38, 1334, 88, 12, 16, 283, 5, 16, 4472, 113, 103, 32, 15, 16, 5345, 19, 178, 32]

>>> train_labels[0]

1

>>> max([max(sequence) for sequence in train_data])

9999

>>> word_index = imdb.get_word_index()

Downloading data from https://s3.amazonaws.com/text-datasets/imdb_word_index.json

1646592/1641221 [==============================] - 3s 2us/step

>>> reverse_word_index = dict([(value, key) for (key, value) in word_index.items()])

>>> decoded_review = ' '.join([reverse_word_index.get(i - 3, '?') for i in train_data[0]])

>>> decoded_review

"? this film was just brilliant casting location scenery story direction everyone's really suited the part they played and you could just imagine being there robert ? is an amazing actor and now the same being director ? father came from the same scottish island as myself so i loved the fact there was a real connection with this film the witty remarks throughout the film were great it was just brilliant so much that i bought the film as soon as it was released for ? and would recommend it to everyone to watch and the fly fishing was amazing really cried at the end it was so sad and you know what they say if you cry at a film it must have been good and this definitely was also ? to the two little boy's that played the ? of norman and paul they were just brilliant children are often left out of the ? list i think because the stars that play them all grown up are such a big profile for the whole film but these children are amazing and should be praised for what they have done don't you think the whole story was so lovely because it was true and was someone's life after all that was shared with us all"
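The `i - 3` offset is needed because, with the default arguments of `imdb.load_data`, indices 0, 1 and 2 are reserved (padding, start of sequence, unknown word), so real words are stored as rank + 3. Here is a minimal sketch of the same decoding on a made-up vocabulary; `toy_word_index` and `encoded` are hypothetical values for illustration, not taken from the dataset.

```python
# Hypothetical toy vocabulary in the same shape as imdb.get_word_index():
# word -> rank (1 = most frequent).
toy_word_index = {"the": 1, "film": 2, "brilliant": 3}

# Invert the mapping: rank -> word.
toy_reverse_index = {rank: word for word, rank in toy_word_index.items()}

# Encoded review: 1 marks the start, 2 marks an out-of-vocabulary word,
# and real words are stored as rank + 3.
encoded = [1, 4, 5, 2, 6]

# Reserved indices fall outside the reverse index and decode to '?'.
decoded = " ".join(toy_reverse_index.get(i - 3, "?") for i in encoded)
print(decoded)  # ? the film ? brilliant
```

This is why the decoded review above starts with `?` and has `?` wherever a word fell outside the 10,000-word vocabulary.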

>>> import numpy as np

>>> def vectorize_sequences(sequences, dimension=10000):

...     results = np.zeros((len(sequences), dimension))

...     for i, sequence in enumerate(sequences):

...         results[i, sequence] = 1.

...     return results

...

>>> x_train = vectorize_sequences(train_data)

>>> x_test = vectorize_sequences(test_data)

>>> x_train[0]

array([0., 1., 1., ..., 0., 0., 0.])
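To make it clearer what `vectorize_sequences` produces, here is a pure-Python sketch of the same multi-hot encoding on a tiny input. The function name `multi_hot` and the toy data are assumptions for illustration; the real code uses NumPy fancy indexing instead of the inner loop.

```python
def multi_hot(sequences, dimension):
    """Return one row per sequence with 1.0 at every index that occurs in it."""
    rows = []
    for sequence in sequences:
        row = [0.0] * dimension
        for index in sequence:
            row[index] = 1.0  # repeated indices just set the same slot again
        rows.append(row)
    return rows

# Two toy "reviews" over a 5-word vocabulary.
toy = multi_hot([[1, 3, 3], [0, 2]], dimension=5)
print(toy[0])  # [0.0, 1.0, 0.0, 1.0, 0.0]
print(toy[1])  # [1.0, 0.0, 1.0, 0.0, 0.0]
```

Word order and word counts are discarded: each row only records whether a word appears in the review.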

>>> y_train = np.asarray(train_labels).astype('float32')

>>> y_test = np.asarray(test_labels).astype('float32')

>>> from keras import models

>>> from keras import layers

>>> model = models.Sequential()

>>> model.add(layers.Dense(16, activation='relu', input_shape=(10000,)))

>>> model.add(layers.Dense(16, activation='relu'))

>>> model.add(layers.Dense(1, activation='sigmoid'))
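As a rough sanity check on the size of this network, the number of trainable parameters can be worked out by hand: a fully connected layer has one weight per input/unit pair plus one bias per unit. The helper `dense_params` below is a hypothetical name used only for this arithmetic.

```python
def dense_params(inputs, units):
    # One weight per (input, unit) pair plus one bias per unit.
    return inputs * units + units

# (inputs, units) for the three Dense layers defined above.
layer_sizes = [(10000, 16), (16, 16), (16, 1)]
counts = [dense_params(i, u) for i, u in layer_sizes]
print(counts)       # [160016, 272, 17]
print(sum(counts))  # 160305
```

Almost all of the parameters sit in the first layer, which maps the 10,000-dimensional input to 16 units.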

>>> model.compile(optimizer='rmsprop', loss='binary_crossentropy', metrics=['accuracy'])

WARNING:tensorflow:From /usr/local/lib/python3.6/dist-packages/tensorflow/python/ops/nn_impl.py:180: add_dispatch_support.<locals>.wrapper (from tensorflow.python.ops.array_ops) is deprecated and will be removed in a future version.

Instructions for updating:

Use tf.where in 2.0, which has the same broadcast rule as np.where

>>> x_val = x_train[:10000]

>>> partial_x_train = x_train[10000:]

>>> y_val = y_train[:10000]

>>> partial_y_train = y_train[10000:]

>>> model.compile(optimizer='rmsprop', loss='binary_crossentropy', metrics=['acc'])
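For reference, the `binary_crossentropy` loss for a single sample with label y and predicted probability p is -[y·log(p) + (1-y)·log(1-p)]. The sketch below evaluates that formula directly; it is a simplified illustration, and the framework additionally averages over the batch and clips p away from 0 and 1.

```python
import math

def binary_crossentropy(y_true, p):
    """Loss for one sample: -[y*log(p) + (1-y)*log(1-p)]."""
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1 - p))

# An undecided prediction (p = 0.5) costs ln 2 regardless of the label.
print(round(binary_crossentropy(1, 0.5), 4))  # 0.6931
# A confident correct prediction is cheap, a confident wrong one is expensive.
print(round(binary_crossentropy(1, 0.9), 4))  # 0.1054
print(round(binary_crossentropy(1, 0.1), 4))  # 2.3026
```

A loss close to 0.69 therefore means the model is doing no better than guessing.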

>>> history = model.fit(partial_x_train, partial_y_train, epochs=20, batch_size=512, validation_data=(x_val, y_val))

2020-02-09 18:04:15.573627: I tensorflow/core/platform/cpu_feature_guard.cc:142] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA

2020-02-09 18:04:15.591352: I tensorflow/stream_executor/platform/default/dso_loader.cc:42] Successfully opened dynamic library libcuda.so.1

2020-02-09 18:04:15.592341: E tensorflow/stream_executor/cuda/cuda_driver.cc:318] failed call to cuInit: CUDA_ERROR_SYSTEM_DRIVER_MISMATCH: system has unsupported display driver / cuda driver combination

2020-02-09 18:04:15.592388: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:169] retrieving CUDA diagnostic information for host: kkuro-E5800-T110f-E

2020-02-09 18:04:15.592399: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:176] hostname: kkuro-E5800-T110f-E

2020-02-09 18:04:15.592530: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:200] libcuda reported version is: 440.59.0

2020-02-09 18:04:15.592562: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:204] kernel reported version is: 440.48.2

2020-02-09 18:04:15.592573: E tensorflow/stream_executor/cuda/cuda_diagnostics.cc:313] kernel version 440.48.2 does not match DSO version 440.59.0 -- cannot find working devices in this configuration

2020-02-09 18:04:15.729381: I tensorflow/core/platform/profile_utils/cpu_utils.cc:94] CPU Frequency: 3092885000 Hz

2020-02-09 18:04:15.738386: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0xa13df70 executing computations on platform Host. Devices:

2020-02-09 18:04:15.738459: I tensorflow/compiler/xla/service/service.cc:175] StreamExecutor device (0): <undefined>, <undefined>

2020-02-09 18:04:15.883609: W tensorflow/compiler/jit/mark_for_compilation_pass.cc:1412] (One-time warning): Not using XLA:CPU for cluster because envvar TF_XLA_FLAGS=--tf_xla_cpu_global_jit was not set. If you want XLA:CPU, either set that envvar, or use experimental_jit_scope to enable XLA:CPU. To confirm that XLA is active, pass --vmodule=xla_compilation_cache=1 (as a proper command-line flag, not via TF_XLA_FLAGS) or set the envvar XLA_FLAGS=--xla_hlo_profile.

WARNING:tensorflow:From /usr/local/lib/python3.6/dist-packages/keras/backend/tensorflow_backend.py:422: The name tf.global_variables is deprecated. Please use tf.compat.v1.global_variables instead.

Train on 15000 samples, validate on 10000 samples

Epoch 1/20

15000/15000 [==============================] - 1s 96us/step - loss: 0.4989 - acc: 0.7990 - val_loss: 0.3895 - val_acc: 0.8521

Epoch 2/20

15000/15000 [==============================] - 1s 60us/step - loss: 0.3011 - acc: 0.8980 - val_loss: 0.3204 - val_acc: 0.8742

Epoch 3/20

15000/15000 [==============================] - 1s 61us/step - loss: 0.2227 - acc: 0.9269 - val_loss: 0.2949 - val_acc: 0.8825

Epoch 4/20

15000/15000 [==============================] - 1s 62us/step - loss: 0.1769 - acc: 0.9413 - val_loss: 0.2784 - val_acc: 0.8900

Epoch 5/20

15000/15000 [==============================] - 1s 60us/step - loss: 0.1429 - acc: 0.9542 - val_loss: 0.2769 - val_acc: 0.8894

Epoch 6/20

15000/15000 [==============================] - 1s 62us/step - loss: 0.1204 - acc: 0.9624 - val_loss: 0.2901 - val_acc: 0.8873

Epoch 7/20

15000/15000 [==============================] - 1s 61us/step - loss: 0.0976 - acc: 0.9714 - val_loss: 0.3057 - val_acc: 0.8846

Epoch 8/20

15000/15000 [==============================] - 1s 61us/step - loss: 0.0811 - acc: 0.9777 - val_loss: 0.3367 - val_acc: 0.8823

Epoch 9/20

15000/15000 [==============================] - 1s 60us/step - loss: 0.0681 - acc: 0.9809 - val_loss: 0.3593 - val_acc: 0.8772

Epoch 10/20

15000/15000 [==============================] - 1s 61us/step - loss: 0.0581 - acc: 0.9850 - val_loss: 0.3755 - val_acc: 0.8761

Epoch 11/20

15000/15000 [==============================] - 1s 60us/step - loss: 0.0448 - acc: 0.9894 - val_loss: 0.3992 - val_acc: 0.8777

Epoch 12/20

15000/15000 [==============================] - 1s 61us/step - loss: 0.0378 - acc: 0.9915 - val_loss: 0.4584 - val_acc: 0.8677

Epoch 13/20

15000/15000 [==============================] - 1s 61us/step - loss: 0.0296 - acc: 0.9942 - val_loss: 0.4602 - val_acc: 0.8744

Epoch 14/20

15000/15000 [==============================] - 1s 61us/step - loss: 0.0249 - acc: 0.9954 - val_loss: 0.4959 - val_acc: 0.8729

Epoch 15/20

15000/15000 [==============================] - 1s 61us/step - loss: 0.0191 - acc: 0.9970 - val_loss: 0.5268 - val_acc: 0.8717

Epoch 16/20

15000/15000 [==============================] - 1s 61us/step - loss: 0.0150 - acc: 0.9983 - val_loss: 0.5586 - val_acc: 0.8688

Epoch 17/20

15000/15000 [==============================] - 1s 61us/step - loss: 0.0121 - acc: 0.9985 - val_loss: 0.6028 - val_acc: 0.8654

Epoch 18/20

15000/15000 [==============================] - 1s 61us/step - loss: 0.0092 - acc: 0.9989 - val_loss: 0.6289 - val_acc: 0.8659

Epoch 19/20

15000/15000 [==============================] - 1s 61us/step - loss: 0.0055 - acc: 0.9999 - val_loss: 0.7878 - val_acc: 0.8475

Epoch 20/20

15000/15000 [==============================] - 1s 61us/step - loss: 0.0091 - acc: 0.9979 - val_loss: 0.7043 - val_acc: 0.8644

>>> history_dict = history.history

>>> history_dict.keys()

dict_keys(['val_loss', 'val_acc', 'loss', 'acc'])

>>> import matplotlib.pyplot as plt

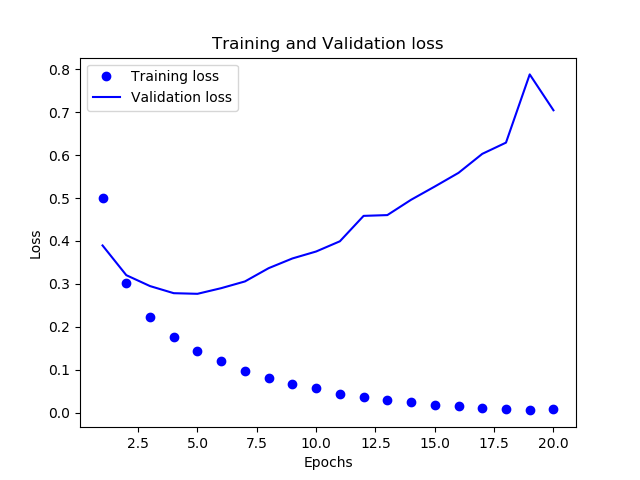

>>> loss_values = history_dict['loss']

>>> val_loss_values = history_dict['val_loss']

>>> epochs = range(1, len(loss_values) + 1)

>>> plt.plot(epochs, loss_values, 'bo', label='Training loss')

[<matplotlib.lines.Line2D object at 0x7f94904d43c8>]

>>> plt.plot(epochs, val_loss_values, 'b', label='Validation loss')

[<matplotlib.lines.Line2D object at 0x7f95d8c3ccc0>]

>>> plt.title('Training and Validation loss')

Text(0.5,1,'Training and Validation loss')

>>> plt.xlabel('Epochs')

Text(0.5,0,'Epochs')

>>> plt.ylabel('Loss')

Text(0,0.5,'Loss')

>>> plt.legend()

<matplotlib.legend.Legend object at 0x7f94904d4dd8>

>>> plt.show()

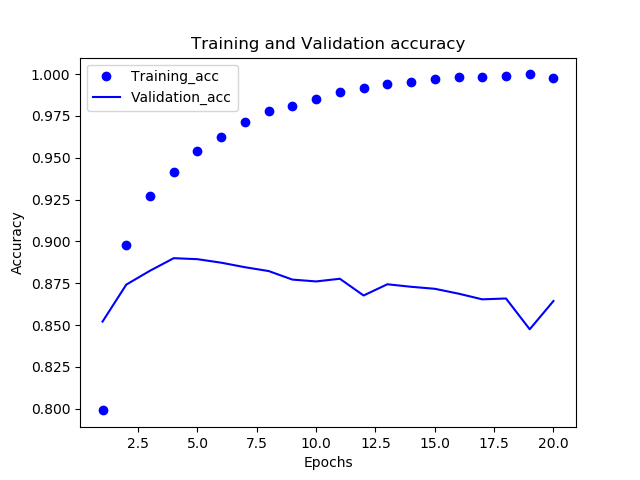

>>> acc = history_dict['acc']

>>> val_acc = history_dict['val_acc']

>>> plt.plot(epochs, acc, 'bo', label='Training_acc')

[<matplotlib.lines.Line2D object at 0x7f948c4115c0>]

>>> plt.plot(epochs, val_acc, 'b', label='Validation_acc')

[<matplotlib.lines.Line2D object at 0x7f949264b3c8>]

>>> plt.title('Training and Validation accuracy')

Text(0.5,1,'Training and Validation accuracy')

>>> plt.xlabel('Epochs')

Text(0.5,0,'Epochs')

>>> plt.ylabel('Accuracy')

Text(0,0.5,'Accuracy')

>>> plt.legend()

<matplotlib.legend.Legend object at 0x7f948c411fd0>

>>> plt.show()

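Sure enough, once training passes epoch 4 the validation loss climbs and the validation accuracy drops, even though the training loss keeps falling. This appears to be what overfitting looks like.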
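The turning point can also be read off numerically rather than from the plots. The sketch below copies the `val_loss` and `val_acc` values from the 20-epoch training log above into plain lists; in a live session the same lists would come from `history_dict['val_loss']` and `history_dict['val_acc']`.

```python
# Validation metrics per epoch, copied from the training log above.
val_loss = [0.3895, 0.3204, 0.2949, 0.2784, 0.2769, 0.2901, 0.3057, 0.3367,
            0.3593, 0.3755, 0.3992, 0.4584, 0.4602, 0.4959, 0.5268, 0.5586,
            0.6028, 0.6289, 0.7878, 0.7043]
val_acc = [0.8521, 0.8742, 0.8825, 0.8900, 0.8894, 0.8873, 0.8846, 0.8823,
           0.8772, 0.8761, 0.8777, 0.8677, 0.8744, 0.8729, 0.8717, 0.8688,
           0.8654, 0.8659, 0.8475, 0.8644]

# Epochs are 1-based, list indices are 0-based.
best_loss_epoch = val_loss.index(min(val_loss)) + 1
best_acc_epoch = val_acc.index(max(val_acc)) + 1

print(best_loss_epoch, min(val_loss))  # 5 0.2769
print(best_acc_epoch, max(val_acc))    # 4 0.89
```

In this run validation accuracy peaks at epoch 4 and validation loss bottoms out at epoch 5, so stopping around epoch 4 is a reasonable choice.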

So I'll set epochs=4 and see what happens when I tweak various parameters.

>>> model = models.Sequential()

>>> model.add(layers.Dense(16, activation='relu', input_shape=(10000,)))

>>> model.add(layers.Dense(16, activation='relu'))

>>> model.add(layers.Dense(1, activation='sigmoid'))

>>> model.compile(optimizer='rmsprop', loss='binary_crossentropy', metrics=['accuracy'])

>>> model.fit(x_train, y_train, epochs=4, batch_size=512)

Epoch 1/4

25000/25000 [==============================] - 1s 47us/step - loss: 0.4394 - accuracy: 0.8197

Epoch 2/4

25000/25000 [==============================] - 1s 43us/step - loss: 0.2525 - accuracy: 0.9104

Epoch 3/4

25000/25000 [==============================] - 1s 44us/step - loss: 0.1960 - accuracy: 0.9292

Epoch 4/4

25000/25000 [==============================] - 1s 42us/step - loss: 0.1636 - accuracy: 0.9417

<keras.callbacks.callbacks.History object at 0x7f948c3d0b00>

>>> results = model.evaluate(x_test, y_test)

25000/25000 [==============================] - 1s 52us/step

>>> results

[0.29960916100025176, 0.8816400170326233]
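
The two numbers returned by evaluate are easier to read with labels attached. A small sketch, assuming the order is loss first and then the single metric passed to compile (Keras exposes the actual order as `model.metrics_names`; the hard-coded list below is an assumption standing in for it):

```python
# Values copied from the evaluate() output above.
results = [0.29960916100025176, 0.8816400170326233]

# Assumed order; with a live model, model.metrics_names should be used instead.
metric_names = ["loss", "accuracy"]

named = dict(zip(metric_names, results))
print("loss={loss:.4f} accuracy={accuracy:.2%}".format(**named))
# loss=0.2996 accuracy=88.16%
```

So with 4 epochs the model gets roughly 88% of the test reviews right.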

>>> model.predict(x_test)

array([[0.18844399],

[0.9997899 ],

[0.8822979 ],

...,

[0.14371884],

[0.08577126],

[0.62207 ]], dtype=float32)
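
Each value printed by predict is the sigmoid output, which can be read as the estimated probability that the review is positive (label 1 in the IMDB dataset). To turn these into class labels, a cutoff has to be chosen. The sketch below uses 0.5, which is a common default but still an assumption, and the helper name `to_labels` is made up for illustration. Only the six values visible in the output above are used.

```python
def to_labels(probabilities, threshold=0.5):
    """Map sigmoid outputs to 0 (negative) or 1 (positive)."""
    return [1 if p > threshold else 0 for p in probabilities]


# The six predictions shown above (the elided middle part is not included).
shown = [0.18844399, 0.9997899, 0.8822979, 0.14371884, 0.08577126, 0.62207]
print(to_labels(shown))  # [0, 1, 1, 0, 0, 1]
```

Values near 0 or 1, such as 0.9997899, are confident predictions, while something like 0.62207 is much closer to a coin flip.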

>>> model = models.Sequential()

>>> model.add(layers.Dense(16, activation='relu', input_shape=(10000,)))

>>> model.add(layers.Dense(1, activation='sigmoid'))

>>> model.compile(optimizer='rmsprop', loss='binary_crossentropy', metrics=['accuracy'])

>>> model.fit(x_train, y_train, epochs=4, batch_size=512)

Epoch 1/4

...

<keras.callbacks.callbacks.History object at 0x7f9491b66160>

>>> results = model.evaluate(x_test, y_test)

25000/25000 [==============================] - 1s 42us/step

>>> results

[0.30649682688713076, 0.880840003490448]

>>> model = models.Sequential()

>>> model.add(layers.Dense(32, activation='relu', input_shape=(10000,)))

>>> model.add(layers.Dense(32, activation='relu'))

>>> model.add(layers.Dense(1, activation='sigmoid'))

>>> model.compile(optimizer='rmsprop', loss='binary_crossentropy', metrics=['accuracy'])

>>> model.fit(x_train, y_train, epochs=4, batch_size=512)

Epoch 1/4

...

<keras.callbacks.callbacks.History object at 0x7f948bf58978>

>>> results = model.evaluate(x_test, y_test)

25000/25000 [==============================] - 1s 48us/step

>>> results

[0.31329001052379607, 0.8779199719429016]
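
With three architectures tried so far, it helps to line the numbers up. The snippet below just collects the evaluate results printed above and ranks them by test accuracy. The labels are my own shorthand for the layer setups.

```python
# (description, test loss, test accuracy), copied from the outputs above.
runs = [
    ("2 hidden layers x 16 units", 0.29960916100025176, 0.8816400170326233),
    ("1 hidden layer x 16 units", 0.30649682688713076, 0.880840003490448),
    ("2 hidden layers x 32 units", 0.31329001052379607, 0.8779199719429016),
]

# Highest test accuracy first.
ranked = sorted(runs, key=lambda run: run[2], reverse=True)
for name, loss, accuracy in ranked:
    print("{:<28} loss={:.4f} accuracy={:.4f}".format(name, loss, accuracy))

spread = ranked[0][2] - ranked[-1][2]
print("accuracy spread: {:.4f}".format(spread))
```

The spread is well under half a percentage point, and each setup was trained only once, so differences this small may simply come from random weight initialization rather than from the architecture.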

>>> model = models.Sequential()

>>> model.add(layers.Dense(16, activation='relu', input_shape=(10000,)))

>>> model.add(layers.Dense(16, activation='relu'))

>>> model.add(layers.Dense(1, activation='sigmoid'))

>>> model.compile(optimizer='rmsprop', loss='mse', metrics=['accuracy'])

>>> model.fit(x_train, y_train, epochs=4, batch_size=512)

Epoch 1/4

...

<keras.callbacks.callbacks.History object at 0x7f948bdcbb38>

>>> results = model.evaluate(x_test, y_test)

25000/25000 [==============================] - 1s 41us/step

>>> results

[0.08861505253911019, 0.8802400231361389]
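
The loss of 0.0886 looks far better than the roughly 0.30 from the earlier runs, but the two numbers come from different formulas and cannot be compared directly. Only the accuracy (0.8802 here) is comparable. The sketch below computes both losses by hand on the same predictions to show the gap. The labels and predictions are made-up illustration values, and the function names are my own, not Keras APIs.

```python
import math


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean of -(t*log(p) + (1-t)*log(1-p)) over all samples."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # keep log() away from 0
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)


def mean_squared_error(y_true, y_pred):
    """Mean of (t - p)^2 over all samples."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical labels and sigmoid outputs, NOT from the model above.
y_true = [1, 0, 1, 0]
y_pred = [0.9, 0.2, 0.6, 0.4]

bce = binary_crossentropy(y_true, y_pred)
mse = mean_squared_error(y_true, y_pred)
print("binary_crossentropy={:.4f} mse={:.4f}".format(bce, mse))
# binary_crossentropy=0.3375 mse=0.0925
```

For predictions between 0 and 1, the cross-entropy of a sample is never smaller than its squared error, so a lower number after switching to 'mse' says nothing about whether the model got better.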